If you have ever played a 3D video game, used Photoshop, or run a Google Search, you have used Matrix Multiplication. It is the engine that drives modern computing.

Students hate it because it feels like mindless arithmetic: "Multiply this row by that column, add them up, repeat 50 times." But if you understand the geometry behind it, it becomes intuitive. Matrices are not just grids of numbers; they are transformation machines.

1. The "Row by Column" Rule

Why do we multiply rows by columns? Why not row by row?

Think of Matrix A as the Transformation and Matrix B as the Data.

If you want to apply a transformation to a list of points (like rotating a 3D character), each column of B is a point (x, y, z). Each row of A tells you how to mix those inputs to get the new output.

[New X; New Y] = [a b; c d] * [Old X; Old Y]

• New X = (a * Old X) + (b * Old Y)

• New Y = (c * Old X) + (d * Old Y)

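Each of these sums is a Dot Product: one row of A multiplied entry by entry with one column of B, then added up. A dot product measures how much one vector aligns with another. Because every output entry is computed the same way and independently of the others, a computer can calculate thousands of new positions in a split second.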
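To make the rule concrete, here is a minimal sketch in plain Python (no libraries). The helper name `matmul` and the sample numbers are made up for illustration; a real program would normally use an optimized library instead.

```python
def matmul(A, B):
    """Multiply A (m x n) by B (n x p) using the row-by-column rule."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("columns of A must equal rows of B")
    p = len(B[0])
    # Entry (i, j) is the dot product of row i of A with column j of B.
    return [
        [sum(A[i][k] * B[k][j] for k in range(n)) for j in range(p)]
        for i in range(len(A))
    ]

# Transformation: rows are (a, b) and (c, d). Values chosen arbitrarily.
A = [[2, 0],
     [1, 3]]

# Data: each COLUMN is one point (Old X, Old Y): (1, 0), (0, 1), (4, 5).
B = [[1, 0, 4],
     [0, 1, 5]]

new_points = matmul(A, B)
print(new_points)  # [[2, 0, 8], [1, 3, 19]]
```

Reading the result column by column, the point (4, 5) moved to (8, 19): New X = 2*4 + 0*5 and New Y = 1*4 + 3*5.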

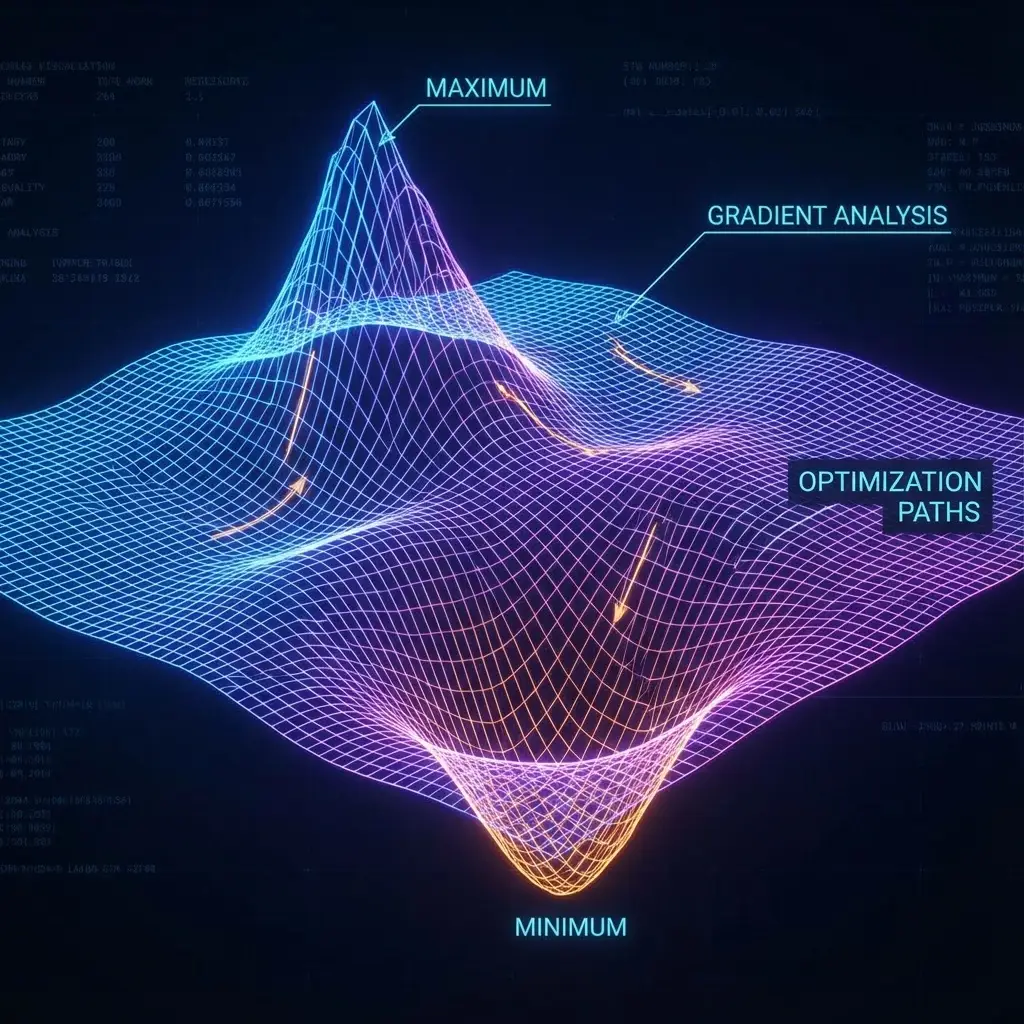

2. Transforms: Scale, Rotate, Shear

Every 2x2 matrix describes a specific way to warp space.

- Identity: [1 0; 0 1]. Does nothing.

- Scale: [2 0; 0 2]. Doubles the size of everything.

- Rotate 90°: [0 -1; 1 0]. Turns everything a quarter turn counterclockwise.

- Shear: [1 1; 0 1]. Slants everything to the right (like italic text).

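By multiplying these matrices together, you can combine actions. Rotate * Scale * Shear = one single matrix that does all three at once. Reading right to left, that matrix shears first, then scales, then rotates. This is one reason graphics cards are so fast: they apply a single combined matrix to every point instead of three separate ones.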
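A small sketch of that idea, using the four matrices listed in this section. The `matmul` helper and the test point (1, 1) are illustrative assumptions, not part of any graphics API.

```python
def matmul(A, B):
    # Row-by-column rule: dot each row of A with each column of B.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

rotate = [[0, -1], [1, 0]]   # 90 degrees counterclockwise
scale = [[2, 0], [0, 2]]     # double everything
shear = [[1, 1], [0, 1]]     # slant to the right

# One matrix that shears, then scales, then rotates (applied right to left).
combined = matmul(rotate, matmul(scale, shear))

point = [[1], [1]]  # a single point stored as a column

# Applying the three transforms one at a time...
step_by_step = matmul(rotate, matmul(scale, matmul(shear, point)))
# ...gives the same answer as applying the combined matrix once.
all_at_once = matmul(combined, point)

print(combined)      # [[0, -2], [2, 2]]
print(step_by_step)  # [[-2], [4]]
print(all_at_once)   # [[-2], [4]]
```

The combined matrix is computed once and can then be reused for every point in the scene.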

3. The Order Matters (Non-Commutative)

In normal math, 5 * 3 is the same as 3 * 5.

In Matrix math, A * B is NOT B * A.

Imagine "A" is "Put on your socks" and "B" is "Put on your shoes".

• B * A = Socks then Shoes (Correct). The matrix on the right is applied first.

• A * B = Shoes then Socks (Superman mode).

The order of operations changes the result. This connects to Quantum Mechanics, where position and momentum are represented by operators that do not commute, so measuring position and then momentum gives a different result than measuring momentum and then position.
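You can check this with the Rotate and Shear matrices from Section 2. The sketch below is plain Python with an illustrative `matmul` helper; it also shows that some special pairs (such as a uniform Scale and a Rotate) do happen to commute.

```python
def matmul(A, B):
    # Row-by-column rule: dot each row of A with each column of B.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

rotate = [[0, -1], [1, 0]]
shear = [[1, 1], [0, 1]]
scale = [[2, 0], [0, 2]]

rotate_shear = matmul(rotate, shear)  # shear first, then rotate
shear_rotate = matmul(shear, rotate)  # rotate first, then shear

print(rotate_shear)  # [[0, -1], [1, 1]]
print(shear_rotate)  # [[1, -1], [1, 0]]
print(rotate_shear == shear_rotate)  # False

# Not every pair disagrees: uniform scaling commutes with rotation.
print(matmul(scale, rotate) == matmul(rotate, scale))  # True
```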

4. Real World Application: Neural Networks

Artificial Intelligence (like ChatGPT or the systems built by DeepMind) is basically Matrix Multiplication on steroids.

The "Brain" of an AI is a stack of layers, each holding a massive matrix of "Weights". The input (your text or image) is turned into a vector, and each layer computes: Output = Activation(Weight_Matrix * Input_Vector + Bias)

When an AI "learns", it is just using Calculus to measure how a small change to each number in that matrix would change the error, then nudging the numbers so that the multiplication yields the correct answer (Cat vs Dog). Training a model is just finding the right matrix.
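Here is a minimal sketch of the formula Output = Activation(Weight_Matrix * Input_Vector + Bias) for one tiny layer. The weights, bias, and input are invented numbers, and ReLU is just one common choice of activation; the text above does not say which one a given model uses.

```python
def relu(vector):
    # A common activation: negative values become 0, positives pass through.
    return [max(0.0, x) for x in vector]

def layer(weights, inputs, bias):
    # Weight_Matrix * Input_Vector + Bias: one dot product per output row.
    pre_activation = [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, bias)
    ]
    return relu(pre_activation)

# Hypothetical layer: 3 inputs, 2 outputs.
weights = [[0.5, -1.0, 2.0],
           [1.0, 1.0, -1.0]]
bias = [0.5, -1.0]
inputs = [1.0, 2.0, 3.0]

output = layer(weights, inputs, bias)
print(output)  # [5.0, 0.0]
```

Training would repeatedly adjust the numbers in `weights` and `bias`; this sketch only shows the forward multiplication.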

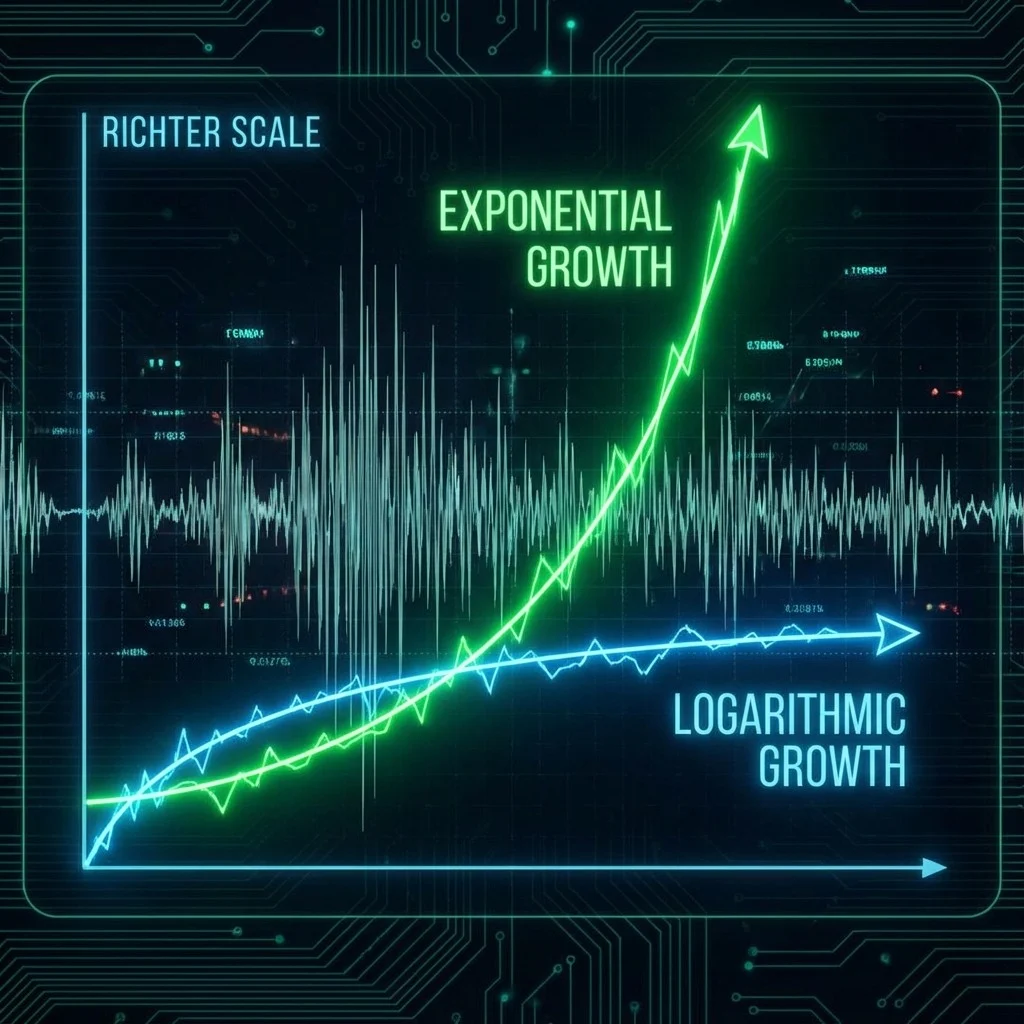

5. PageRank (Google's Billion Dollar Algorithm)

How does Google know which websites are important? They represent the entire internet as a Matrix!

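If Site A links to Site B, the matrix has a nonzero entry in that spot (a '1' in the raw link matrix, scaled so that each page splits its vote evenly among its outgoing links). By repeatedly multiplying this matrix by a probability vector until it stops changing (a process called power iteration, which finds the matrix's dominant Eigenvector), they can mathematically rank the "authority" of every page on the web. It's pure Linear Algebra.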
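The repeated multiplication can be sketched on a toy web of three pages. Everything here is an illustrative assumption: the link structure is made up, each page splits its vote evenly among its outgoing links, and the damping factor used by the real PageRank algorithm is left out to keep the example short.

```python
def matvec(M, v):
    # Matrix times vector: one dot product per row.
    return [sum(m * x for m, x in zip(row, v)) for row in M]

# Hypothetical web: page 0 links to 1 and 2, page 1 links to 2, page 2 links to 0.
links = {0: [1, 2], 1: [2], 2: [0]}
n = len(links)

# M[dst][src] = share of src's vote that goes to dst (each column sums to 1).
M = [[0.0] * n for _ in range(n)]
for src, targets in links.items():
    for dst in targets:
        M[dst][src] = 1.0 / len(targets)

# Start with equal probability on every page, then multiply again and again.
rank = [1.0 / n] * n
for _ in range(100):
    rank = matvec(M, rank)

print([round(r, 3) for r in rank])  # [0.4, 0.2, 0.4]
```

After enough rounds the vector stops changing, which means it satisfies M * rank = rank. That is exactly the definition of an eigenvector with eigenvalue 1. Pages 0 and 2 end up with the most authority because more of the link weight flows into them.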

6. FAQ

Q: Can I multiply ANY two matrices?

A: No. The inner dimensions must match: (m x n) * (n x p) gives an (m x p) result. The number of columns in the first must equal the number of rows in the second.

Q: What is the Identity Matrix?

A: It's a square matrix with 1s on the diagonal and 0s everywhere else. It acts like the number '1': A * I = A and I * A = A.
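Both answers can be checked with a short sketch. The helper names `shape`, `matmul`, and `identity` are made up for this example.

```python
def shape(M):
    return (len(M), len(M[0]))

def matmul(A, B):
    (m, n), (n2, p) = shape(A), shape(B)
    if n != n2:
        raise ValueError(f"cannot multiply {m}x{n} by {n2}x{p}")
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(p)]
            for i in range(m)]

def identity(n):
    # 1s on the diagonal, 0s everywhere else.
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

A = [[1, 2, 3],
     [4, 5, 6]]          # 2 x 3
B = [[1, 0],
     [0, 1],
     [2, 2]]             # 3 x 2

product = matmul(A, B)   # (2 x 3) * (3 x 2) -> 2 x 2
print(product)           # [[7, 8], [16, 17]]

try:
    matmul(A, A)         # (2 x 3) * (2 x 3): inner dimensions 3 and 2 differ
    mismatch_error = None
except ValueError as err:
    mismatch_error = str(err)
print(mismatch_error)    # cannot multiply 2x3 by 2x3

# The identity leaves A unchanged; its size must match the side it is on.
print(matmul(A, identity(3)) == A)  # True
print(matmul(identity(2), A) == A)  # True
```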

Conclusion

Matrix multiplication is the language of structure. It allows us to manipulate 3D worlds, solve million-variable equations, and build intelligent systems. It is the bridge between static data and dynamic action.